FOSDEM 2015

FOSDEM is a yearly event held in Brussels, Belgium, around the end of January or the beginning of February. Since MistServer has its roots in Open Source, we attended as visitors in 2014. 2014 also happened to be the first year FOSDEM attempted to live stream every single room. We noticed there were some... kinks to work out with their streaming, so later that year we reached out to the FOSDEM organizers and offered our help for 2015. They accepted, and after a few months of working out details, a plan was created.

Previously, FOSDEM used rented FireWire cameras, with DVswitch to manually mix the slides and the camera feed and write the result to files. In 2014, an encoder and streaming service were attached to this setup to create the live streams. Streaming was planned only a short time in advance.

For the 2015 incarnation of FOSDEM, we ended up doing things a little differently. Since the quality of the footage keeps going up, storing it as raw video has become troublesome. Encoding it in real time to a more compact format is possible, but a challenge as well, especially when the hardware is rented each year. Plus there are so many codecs to choose from! Ideally we would pick an open source codec (because, well, FOSDEM), which currently means you're basically stuck with VP8, VP9 or Theora. Unfortunately, all of those can currently only be encoded in software, and there aren't many devices that will play them out of the box. Since we're streaming as well as recording, we decided to go with a format that would play on as many devices as possible, setting aside our ideals in exchange for practicality. We ended up choosing hardware-encoded H.264 video and AAC audio. This combination is currently the industry standard, and practically anything half-decent with a screen will play it. These hardware encoders were attached to a rented laptop, which would feed the streams into MistServer for storage and stream-out. Or that was the plan, anyway.

FOSDEM opted to buy the needed hardware instead of continuing to rent the entire chain, reducing the rental fees tremendously. The hardware encoders could handle SD/HD/3G-SDI and HDMI, so FireWire was no longer a requirement for the cameras. This is a big deal, as FireWire cameras are becoming more and more expensive to rent. Unfortunately, selecting and procuring the hardware took a little longer than expected. The plan was to test a full setup with all the pieces in place by November 2014, over two months before it would actually be needed. We didn't get our hands on the first hardware encoder until after Christmas, and the rest of the hardware wasn't even available yet at that time.

Since the hardware encoder was the most important part of the setup, we set out to get that working first and foremost. This turned out to be a challenge. We knew there were no stable/official Linux drivers for these devices, and had planned to write them ourselves. In November. With time running out, we hacked together something that worked about a week and a half later. This was just in time, because the hard drive image for the rented laptops needed to be delivered to the rental company before mid-January. There were only days to spare, and to make things worse, key people from our team were either on vacation or halfway across the planet at CES!

We drastically simplified the setup we had envisioned, reducing it to practically nothing. We found out the rented laptops had two USB root hubs, one for the left side and another for the right side. We exploited this design and hardcoded the drivers to recognize any encoder plugged into the left side as "camera" and any encoder plugged into the right side as "slides". Simple. There was no time left to code any graphical interface, error checking or anything else. We quickly slapped on a generic desktop and some scripts to enable updating the software over the network, then sent it off to the rental company for copying to all the others. We would finalize the software in the time remaining before FOSDEM started, and then update the laptops using the network update capability. A few days before the event, we received access to what was to become the central streaming server and uploaded a simple website to access the streams from.

Fast forward a few more days and it's the Friday afternoon before FOSDEM begins. The delegation from our team that volunteered to be the video workforce arrived in Brussels, amid all the organizing chaos! After some asking around, we found out where we needed to be and were shown the rest of the hardware for the whole chain. Yes, a day in advance. This wasn't really anybody's fault; it simply took that long to procure all the hardware. The last pieces arrived just days before, hastily express-shipped to Belgium. We hooked up one of the laptops to the whole chain of other devices and updated the software on it by hand. The idea was that we would verify that this one laptop worked, and then clone that setup to the others over the network. This was a problem, however: the physical network wasn't actually set up yet! To make matters even worse, half the software had stopped working correctly on the rental laptops.

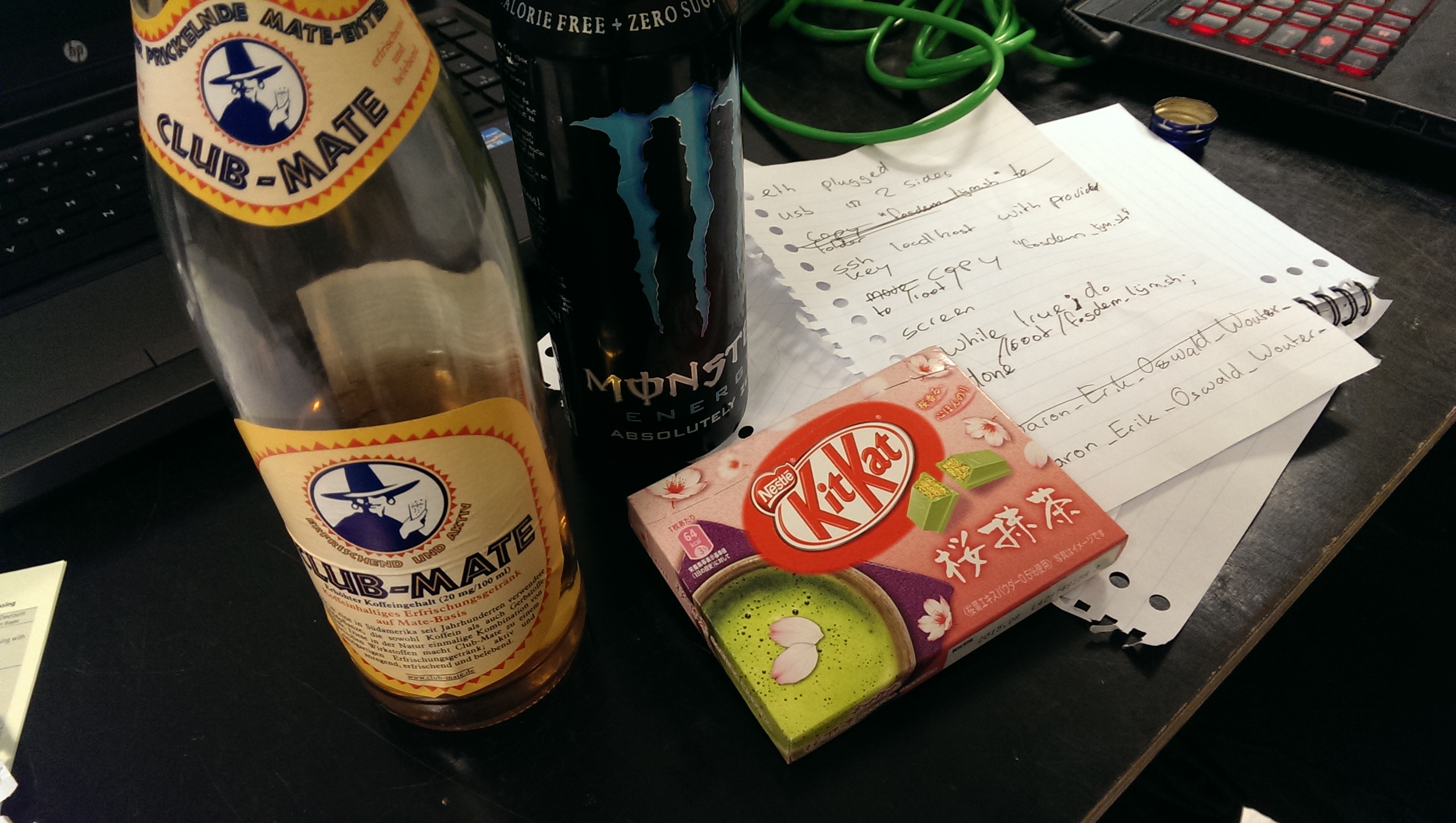

At 21:00 on Friday, this is where we were: feverishly writing scripts, changing settings, rebooting, hooking things up again and again... with 25 copies of the setup sitting in the background, waiting to be updated for tomorrow. We kept at it until late at night before finally returning to our hotel, ready to wake up early the next morning to install the updated and now fully working software on all the other laptops, on what we hoped would be a working network. The final version that we ended up using worked much as we had imagined two weeks earlier: a system service running in the background transparently set up and configured the hardware encoders, detecting which was which based on the side of the laptop they were connected to, then forwarded both streams to a central MistServer install running on a cloud server. If anything went wrong, simply re-plugging the devices would completely reset the software.
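The post doesn't include the service's code, but the side-based detection is simple enough to sketch. The version below is a minimal illustration, assuming a Linux system where each side's ports hang off a distinct USB bus, so the bus number at the start of a device's sysfs name (for example `2-1.4`) identifies the side. The bus-to-role table `BUS_ROLES` and the function names are hypothetical, not taken from the actual FOSDEM software; on real hardware the bus numbers would have to be checked per laptop model.

```python
import os

# Hypothetical mapping from USB bus number to encoder role. On the real
# laptops this would need to be verified per model; with xHCI controllers a
# single physical side can even expose two bus numbers (USB 2 and USB 3).
BUS_ROLES = {1: "camera", 2: "slides"}


def usb_bus_number(sysfs_device_path: str) -> int:
    """Extract the USB bus number from a sysfs device name.

    Linux names USB devices in sysfs as "<bus>-<port>[.<port>...]",
    e.g. "2-1.4" is bus 2, root hub port 1, then port 4 on a downstream hub.
    Interface names such as "2-1.4:1.0" parse the same way.
    """
    name = os.path.basename(sysfs_device_path.rstrip("/"))
    bus, sep, _ports = name.partition("-")
    if not sep or not bus.isdigit():
        raise ValueError(f"not a USB device name: {name!r}")
    return int(bus)


def role_for_device(sysfs_device_path: str, bus_roles=BUS_ROLES) -> str:
    """Classify an encoder as "camera" or "slides" by the bus it sits on."""
    bus = usb_bus_number(sysfs_device_path)
    try:
        return bus_roles[bus]
    except KeyError:
        raise ValueError(f"encoder on unexpected bus {bus}") from None


if __name__ == "__main__":
    for dev in ["/sys/bus/usb/devices/1-2", "/sys/bus/usb/devices/2-1.4/"]:
        print(dev, "->", role_for_device(dev))
```

Keying on the bus rather than on a device serial number is what makes the "just re-plug it" recovery work: any encoder can be swapped in, and its role follows from the port it lands in.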

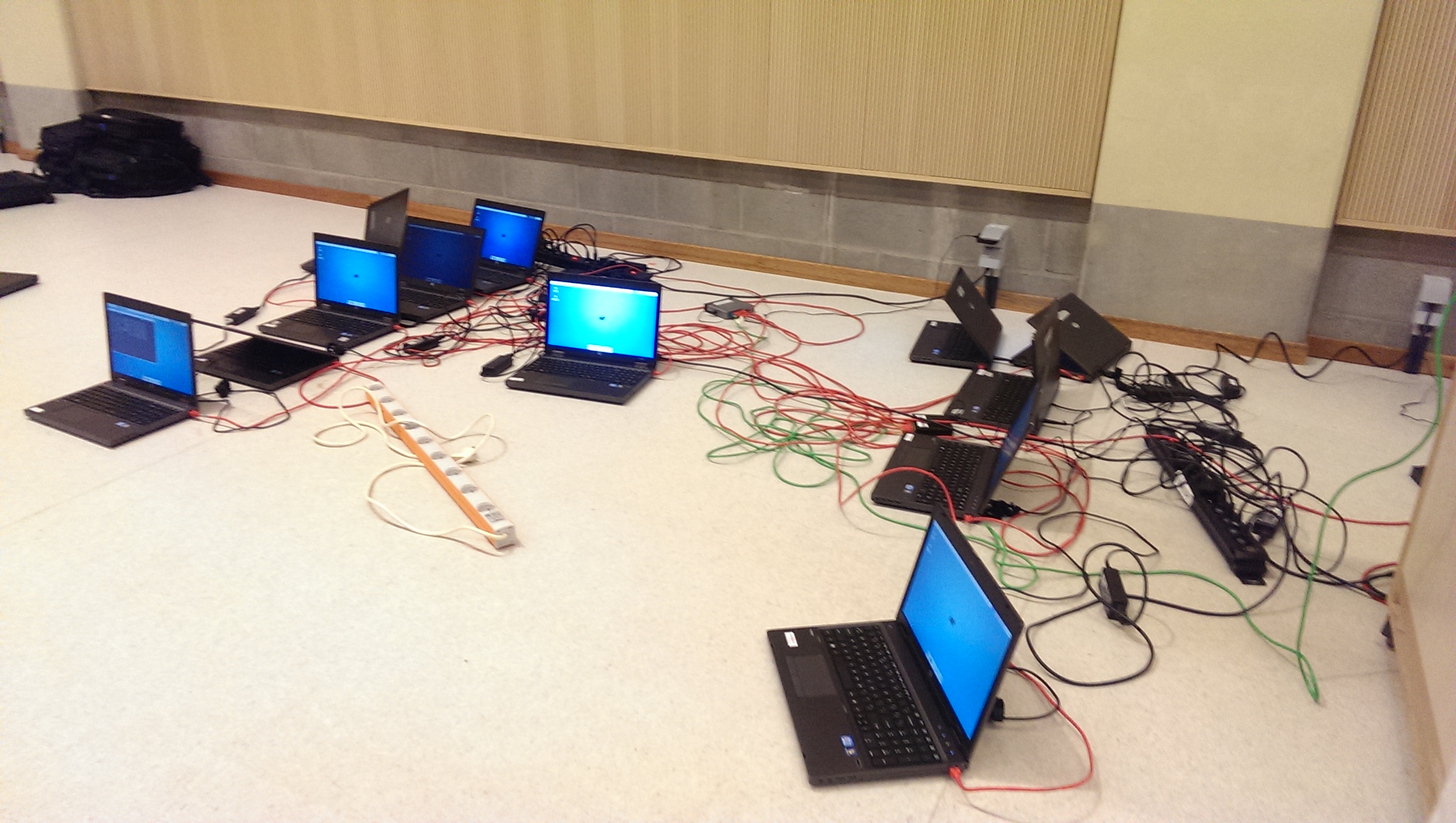

The next morning, the chaos was even worse. There still was no reliable network, so we created a makeshift network of our own using a laptop and a spare switch. We enlisted the help of four random volunteers (I have no idea who these were - if any of you are reading this now: thanks for the help!) to get each of the 25 laptops unpacked, booted, hooked up, updated, named after the room they were going to be used in, labeled, shut down, and packed back up again with the rest of the equipment. Not necessarily in that order. They were being carried out towards the rooms shortly after.

Of course, we discovered a critical bug in the software right after they left the room. Luckily, everyone was instructed to hook up the laptop to the on-site wired network (this was needed for streaming out, though not for recording). The laptops would auto-download software updates on boot, so a patched version was prepared that would auto-install, fixing the problem. Nobody would forget to hook up the laptop to the Ethernet, right? Wrong. Most of our Saturday was spent running from room to room, fixing setup issues, plugging in cables, fixing wrong connections, etcetera. It was absolutely crazy, but a lot of fun, too. And we met a lot of interesting people along the way! Apparently some talks were given, too, but I don't remember seeing any of those myself...

Halfway through the day we took a break for some much-needed human fuel and to push some software and script updates over the network to all the rooms, to improve reliability and reduce the potential for human error. Around this time we also learned why half the rooms weren't streaming: they had accidentally been patched to a different network VLAN. A couple of seconds of distracting the networking team fixed that, and soon after, everything was mostly up and running. Concurrent viewers were climbing steadily, and more and more rooms were coming online as wrong cable connections were corrected, camera settings were put back to normal, and various other bits and pieces were sorted out. Later in the day we got some time to work on the central streaming server itself, and got HTTPS enabled, though we were missing the certificates to enable it on all parts of the system, so it didn't work for everyone. We made some requests and arranged for those to be ready the next day.

While most visitors were heading home after the talks of the first day ended, we gathered feedback. It turns out many of the volunteers were frustrated that the laptop gave absolutely no indication of whether the streams were actually working. This, plus some more reliability improvements we came up with, was reason enough for us to take an entire setup back to the hotel with us. We kept working on it until about 2 AM, uploaded the updated version to the central server and finally went to bed around 3 AM, for about four hours of sleep.

The next morning, everything worked beautifully. Only a few bad cable connections were made, the software updated correctly and there were nearly no problems whatsoever. For almost the entire day, we had 44 streams continuously going live from 22 rooms. We spent most of the day tweaking the system, gathering statistics, and making notes for next year. Around noon we had the remainder of the HTTPS config in place as well. There had been a few complaints about the default streaming mode using the Flash plugin (hey, we don't like that thing, either!), so, feeling confident about the stability of the system, we enabled our experimental live HTML5 MP4 support. This worked very nicely, so we left it enabled for the remainder of the day and gathered a lot of valuable information on its performance in real-life scenarios.

When the first rooms were wrapping up their talks and equipment started coming back, we realized there had been a small oversight: the recordings were on the rented laptops. Those laptops had to go back to the rental company, so the recordings needed to be copied off right away.

We reused our makeshift-network technique from earlier and started daisy-chaining network switches together. One of the FOSDEM core team members used the remote access into the laptops to set up file transfers to an off-site server, while we unpacked, hooked up, checked, shut down and repacked all of them. Halfway through, we ran off to the Janson room to receive some applause during the closing talk, then ran back to continue the file transfers. We managed to transfer over 1 TB of encoded video in about 2-3 hours total. We weren't really keeping track of exactly how long it took... everything was a blur. Eventually we had a neat stack of laptops ready to go back to the rental place, with all the recordings securely stored for further processing.

Of course, there had been no time to make the recordings anything more than a set of files with room names and timestamps. We created a script to assist with manually labeling each and every recorded talk, so that further scripts could produce final picture-in-picture encodes combining the two streams per room, ready to be uploaded to the video archives. We did all the labeling, while a FOSDEM core team member ran the final encodes and arranged the final quality checks and uploads. Just over a month later, many videos were already available to watch, which I believe is a new speed record for FOSDEM. The rest will follow as soon as their encodes complete.

So, what's next? We loved working with the people from FOSDEM, and will be taking care of video again next year. It was an amazing experience, setting up a project so large in scale in such an insanely short time span. Since all the core parts of the setup were bought, not rented, we could take a copy of the entire setup home with us. We will be tweaking it throughout the coming year and will have it perfected for next time. We were able to get "good enough" working in just two days, so a year's worth of time should be enough to get it from "good enough" to "just right". To prevent all the incorrect cable connections next year, most of the hardware will be attached to a board or box of some sort, connected correctly in advance. This also decreases setup time and improves portability. We will also stop using a single laptop for everything and instead switch to two smaller ARM-based Linux systems, one for each of the streams. There will still be rented laptops, but they will serve as optional control and monitoring stations instead. It was nearly impossible to verify correct audio on-site, so we will be adding volume meters as well as other stream status indicators, among other improvements.

We're very excited for next year! We will be giving a talk then instead of just writing a post like this, and since everything will be running much more smoothly next time (right..?), we will be able to see and do more instead of just running around. See you all again next year!

MistServer
